https://www.cnblogs.com/wormday/p/8435617.html

Create a Spring MVC project

File -> New -> Project… -> Spring -> Spring MVC

Configuration

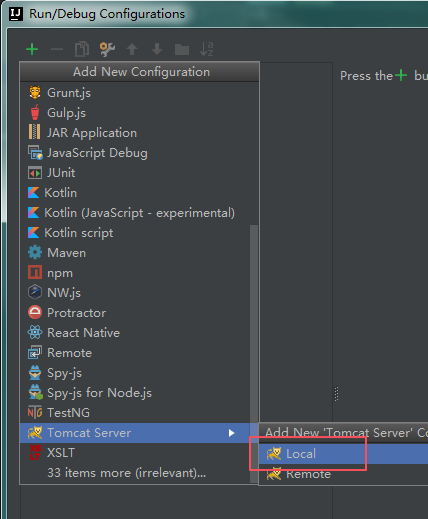

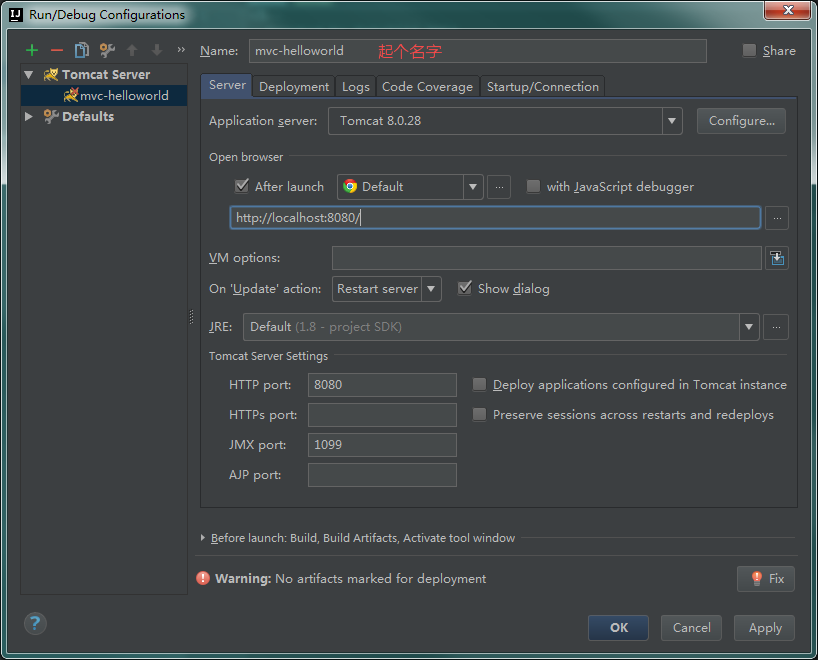

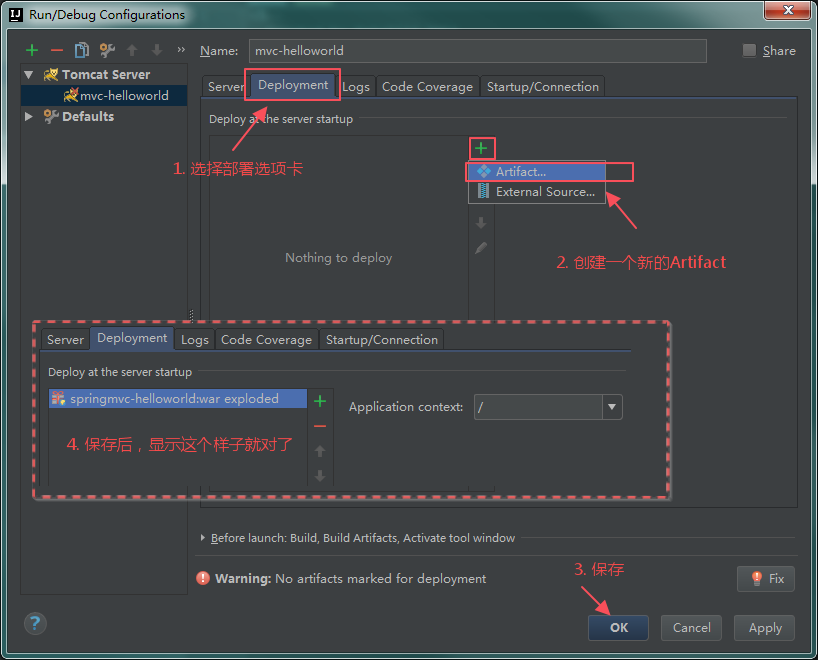

Run -> Edit Configurations…

Configuration

File -> Project Structure…

Run

Open http://localhost:8080/index.jsp in a browser.

Add a Controller

The site opens now, but it isn't MVC yet: there is no M, no V, and no C.

We'll start with the C (Controller) and keep configuring.

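Before creating the Controller, first create a package. Spring MVC can't work with a Controller in the default package. Create the package like this:

I named my package: test

The package name can be anything, but there has to be one.

In this package, create a new Java class file named MyController.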


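You can also put the annotation only on the method, for example @RequestMapping("mvc/hello").

The address of this Controller's action should then be:

http://localhost:8080/mvc/hello.form

At this point, requesting it actually returns 404, because quite a bit of configuration is still missing…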
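How that address is put together can be sketched as a toy model in plain Java (no Spring). It assumes the class carries `@RequestMapping("/mvc")` and the method `@RequestMapping("/hello")`, since the original listing is not available, and that the dispatcher servlet is still mapped to `*.form`. `MappingPath`, `combine` and `url` are hypothetical names; Spring's own path handling also covers wildcards and path variables, which this ignores.

```java
// Toy model (not Spring code): how a class-level and a method-level
// request mapping combine into the URL a browser has to request.
class MappingPath {

    // Make sure a mapping starts with "/" (Spring also tolerates "mvc/hello").
    static String normalize(String segment) {
        return segment.startsWith("/") ? segment : "/" + segment;
    }

    // Join the class-level mapping (may be empty) with the method-level mapping.
    static String combine(String classMapping, String methodMapping) {
        if (classMapping == null || classMapping.isEmpty()) {
            return normalize(methodMapping);
        }
        return normalize(classMapping) + normalize(methodMapping);
    }

    // Host + handler path + the extension the servlet is mapped to ("" for none).
    static String url(String host, String handlerPath, String extension) {
        return host + handlerPath + extension;
    }

    public static void main(String[] args) {
        // Assumed: @RequestMapping("/mvc") on the class, @RequestMapping("/hello") on the method
        System.out.println(url("http://localhost:8080", combine("/mvc", "/hello"), ".form"));
        // Annotation only on the method: @RequestMapping("mvc/hello")
        System.out.println(url("http://localhost:8080", combine("", "mvc/hello"), ".form"));
    }
}
```

Both calls print the same `.form` address, which is why moving the whole path onto the method makes no difference to the URL.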

Change url-pattern (web.xml)

First open the web\WEB-INF\web.xml file.

It contains the servlet mapping settings, which control which servlet handles which kinds of URLs.


In this part of web.xml you can see that IDEA has configured a servlet named dispatcher by default.

This servlet is handled by the class org.springframework.web.servlet.DispatcherServlet.

The URL pattern mapped to this servlet is *.form.

If, like me, you don't want every MVC URL to end in .form, change the url-pattern to a slash: `<url-pattern>/</url-pattern>`
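Which servlet receives a request can be modelled with a simplified version of the Servlet specification's matching order: exact path first, then an extension pattern like `*.form`, then the default `/` (path-prefix patterns such as `/foo/*` are left out of this sketch). It assumes the container is Tomcat, whose global conf/web.xml maps its own `default` servlet to `/` and its `jsp` servlet to `*.jsp`; `UrlPatternMatcher` and `match` are hypothetical names, not the container's code.

```java
import java.util.HashMap;
import java.util.Map;

// Simplified model of Servlet URL-pattern matching (exact, then "*.ext", then "/").
class UrlPatternMatcher {

    // Returns the servlet name that would handle requestPath, or null if none.
    static String match(Map<String, String> patternToServlet, String requestPath) {
        // 1. Exact match ("/" is the default mapping, not an exact one)
        if (!requestPath.equals("/") && patternToServlet.containsKey(requestPath)) {
            return patternToServlet.get(requestPath);
        }
        // 2. Extension match on the last path segment, e.g. "hello.form" -> "*.form"
        String lastSegment = requestPath.substring(requestPath.lastIndexOf('/') + 1);
        int dot = lastSegment.lastIndexOf('.');
        if (dot >= 0) {
            String servlet = patternToServlet.get("*" + lastSegment.substring(dot));
            if (servlet != null) {
                return servlet;
            }
        }
        // 3. Fall back to whatever is mapped to "/"
        return patternToServlet.get("/");
    }

    public static void main(String[] args) {
        // Before: IDEA's *.form mapping plus Tomcat's assumed global mappings
        Map<String, String> before = new HashMap<>();
        before.put("/", "default");
        before.put("*.jsp", "jsp");
        before.put("*.form", "dispatcher");
        System.out.println(match(before, "/mvc/hello.form")); // dispatcher
        System.out.println(match(before, "/mvc/hello"));      // default

        // After: the app maps dispatcher to "/", replacing Tomcat's default servlet
        Map<String, String> after = new HashMap<>();
        after.put("/", "dispatcher");
        after.put("*.jsp", "jsp");
        System.out.println(match(after, "/mvc/hello"));       // dispatcher
        System.out.println(match(after, "/index.jsp"));       // jsp
    }
}
```

Mapping dispatcher to `/` only replaces the default servlet, so .jsp requests still reach the JSP servlet through the more specific extension match.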

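If you restart the application now and request http://localhost:8080/mvc/hello again, you'll find it still returns 404, and every request writes an error to the Server Output window:

org.springframework.web.servlet.PageNotFound.noHandlerFound No mapping found for HTTP request with URI [/mvc/hello] in DispatcherServlet with name 'dispatcher'

In other words, no matching Controller was found. Writing the Controller code is not enough; you also have to tell Spring (here, in fact, the dispatcher servlet) where to look for Controllers.

To confirm this, set a breakpoint inside the Controller and refresh the page: execution never reaches the Controller.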

Configure component-scan (dispatcher-servlet.xml)

component-scan tells the dispatcher servlet where to find the Controllers.

Open dispatcher-servlet.xml.

Add the component-scan entry to the existing configuration.


Then add the view prefix and suffix.


base-package specifies the package that holds the Controllers (here, test).
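The package rule behind base-package can be illustrated with a toy Java check: a class is a scan candidate only if its package is the base package or a sub-package of it. This is a simplification assuming base-package is `test`; Spring actually scans the class files under that package on the classpath and then keeps those carrying stereotype annotations such as `@Controller`. A class in the default package has an empty package name, so a base-package like `test` never covers it. `ComponentScanModel` and the extra class names are hypothetical.

```java
// Toy model (not Spring code) of the package test behind component-scan's base-package.
class ComponentScanModel {

    // Package of a fully qualified class name ("" for the default package).
    static String packageOf(String className) {
        int lastDot = className.lastIndexOf('.');
        return lastDot < 0 ? "" : className.substring(0, lastDot);
    }

    // True if the class lives in basePackage or one of its sub-packages.
    static boolean inBasePackage(String className, String basePackage) {
        String pkg = packageOf(className);
        return pkg.equals(basePackage) || pkg.startsWith(basePackage + ".");
    }

    public static void main(String[] args) {
        String base = "test"; // the package created earlier
        // Hypothetical class names, apart from test.MyController
        String[] candidates = {
            "test.MyController", "test.web.OtherController", "testing.MyController", "MyController"
        };
        for (String c : candidates) {
            System.out.println(c + " -> " + inBasePackage(c, base));
        }
    }
}
```

The `"testing"` case shows why the check compares whole package names rather than a bare string prefix.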

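After this step, restart the project and request http://localhost:8080/mvc/hello again.

This time it should still be a 404, but it's a big step forward from the previous one: the Controller has actually run now, which you can verify if you haven't removed the breakpoint.

This time the 404 happens because the "hello" view can't be found (we only told Spring where views live, but never created the file).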
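The lookup that fails here amounts to string concatenation: prefix + view name + suffix. The sketch assumes a prefix of `/WEB-INF/jsp/` and a suffix of `.jsp`, consistent with where hello.jsp is created next (the original config listing is lost); in Spring these are typically the `prefix` and `suffix` properties of `InternalResourceViewResolver`. `ViewPathModel`, `resolve` and `viewExists` are hypothetical helpers for checking a web root on disk.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Toy model of prefix/suffix view resolution, plus a check that the JSP file exists.
class ViewPathModel {
    static final String PREFIX = "/WEB-INF/jsp/"; // assumed view prefix
    static final String SUFFIX = ".jsp";          // assumed view suffix

    // View name returned by the controller -> path of the JSP inside the web app.
    static String resolve(String viewName) {
        return PREFIX + viewName + SUFFIX;
    }

    // Whether that JSP exists as a regular file under the given web root directory.
    static boolean viewExists(Path webRoot, String viewName) {
        // Drop the leading "/" so the path resolves relative to webRoot.
        return Files.isRegularFile(webRoot.resolve(resolve(viewName).substring(1)));
    }

    public static void main(String[] args) {
        // Defaults to the project's "web" folder; pass another path as the first argument.
        Path webRoot = Paths.get(args.length > 0 ? args[0] : "web");
        System.out.println(resolve("hello") + " exists: " + viewExists(webRoot, "hello"));
    }
}
```

Until web/WEB-INF/jsp/hello.jsp is created, the check reports false, matching the 404 described above.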

Add the view file (.jsp)

Create hello.jsp under web/WEB-INF/jsp.


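Pass values to the View through the Model

With the steps above, the V and C of MVC are done, but there's no sign of the M yet, so let's keep going.

Open the Controller defined earlier, that is, MyController.java.

Add a Model (org.springframework.ui.Model).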
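Conceptually, a Model is a set of named values that the controller fills in and the view reads back by name; in a JSP that read is an EL expression such as `${message}`. The toy below mimics the round trip with a `Map` and a tiny `${name}` substitution. It is not Spring's API: the attribute name `message`, its text, and the helpers `hello` and `render` are hypothetical, and real JSP EL does far more.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.regex.Matcher;
import java.util.regex.Pattern;

// Toy model of controller -> model -> view; not Spring's Model or JSP EL.
class ModelToView {
    // Matches ${name} placeholders such as ${message}
    private static final Pattern PLACEHOLDER = Pattern.compile("\\$\\{(\\w+)\\}");

    // Stand-in for the controller method: put data into the model, return the view name.
    static String hello(Map<String, Object> model) {
        model.put("message", "Hello, Spring MVC"); // hypothetical attribute
        return "hello";
    }

    // Stand-in for the view: replace each ${name} with the model value ("" if missing).
    static String render(String template, Map<String, Object> model) {
        Matcher m = PLACEHOLDER.matcher(template);
        StringBuffer out = new StringBuffer();
        while (m.find()) {
            Object value = model.get(m.group(1));
            m.appendReplacement(out, Matcher.quoteReplacement(value == null ? "" : value.toString()));
        }
        m.appendTail(out);
        return out.toString();
    }

    public static void main(String[] args) {
        Map<String, Object> model = new HashMap<>();
        String viewName = hello(model);
        System.out.println(viewName + ": " + render("<h1>${message}</h1>", model));
    }
}
```

Rendering a missing name as an empty string mirrors how JSP EL prints nothing for a null value.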

When opening a copied project:

go to File -> Project Structure… -> Project Settings -> Project -> SDK -> New SDK -> JDK, with the JDK home path /usr/lib/jvm/jdk-15.0.1

err1: Unsupported class file major version 57

File -> Settings…

Under Build, Execution, Deployment -> Compiler -> Java Compiler, change the project bytecode version.
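Major version 57 means a class was compiled for Java 13: from Java 5 onward, the class-file major version is the Java release plus 44. The message typically comes from a bytecode-reading library (for example the ASM copy bundled with older Spring versions) that meets a newer class-file version than it supports, which is why lowering the project bytecode version helps. The sketch below reads the version straight from a class file's header; `ClassFileVersion` and its methods are hypothetical names.

```java
import java.io.IOException;
import java.nio.ByteBuffer;
import java.nio.file.Files;
import java.nio.file.Paths;

// Reads the class-file major version from the header of a compiled .class file.
class ClassFileVersion {
    // Header layout: u4 magic (0xCAFEBABE), u2 minor_version, u2 major_version, big-endian.
    static int majorVersion(byte[] classBytes) {
        if (classBytes.length < 8) {
            throw new IllegalArgumentException("too short to be a class file");
        }
        ByteBuffer header = ByteBuffer.wrap(classBytes); // big-endian by default
        if (header.getInt(0) != 0xCAFEBABE) {
            throw new IllegalArgumentException("missing 0xCAFEBABE magic number");
        }
        return header.getShort(6) & 0xFFFF;
    }

    // From Java 5 (major 49) on: release = major - 44, e.g. 52 -> 8, 57 -> 13, 59 -> 15.
    static int javaRelease(int major) {
        return major - 44;
    }

    public static void main(String[] args) throws IOException {
        // Pass paths to compiled .class files, e.g. from the project's output directory.
        for (String path : args) {
            int major = majorVersion(Files.readAllBytes(Paths.get(path)));
            System.out.println(path + ": major " + major + " (Java " + javaRelease(major) + ")");
        }
    }
}
```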

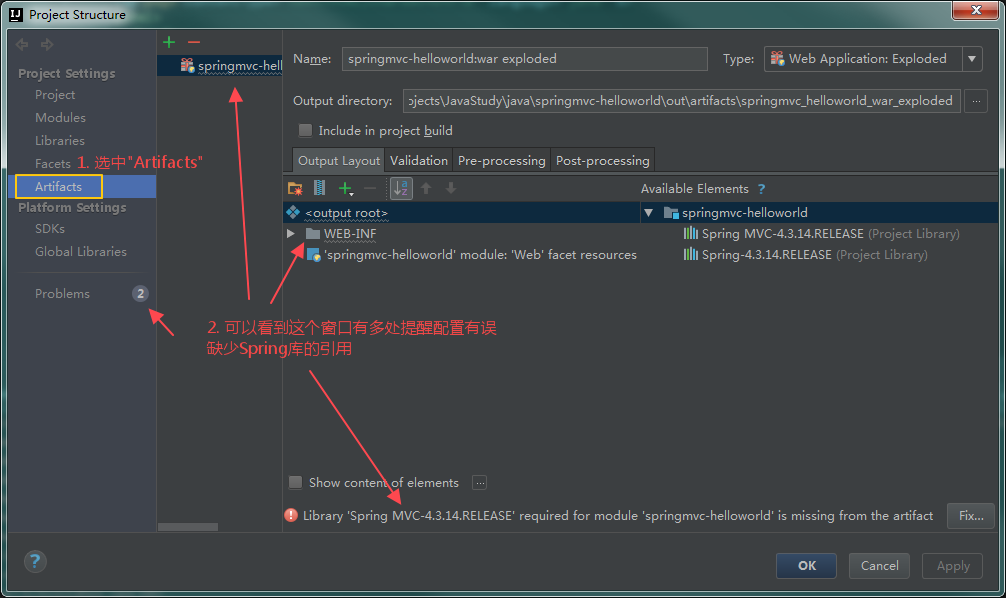

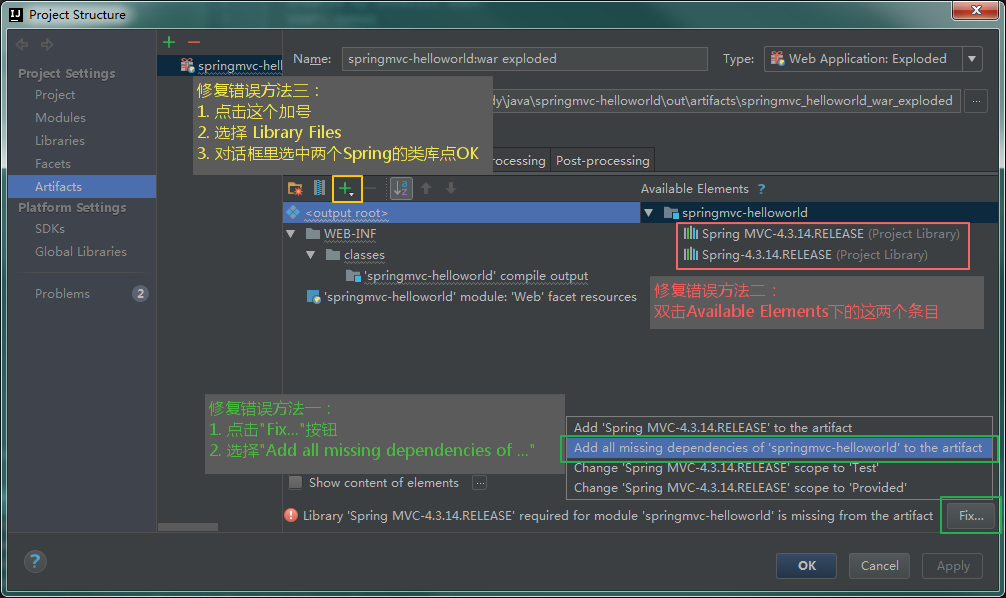

err2: java.lang.ClassNotFoundException: org.springframework.web.context.ContextLoaderListener

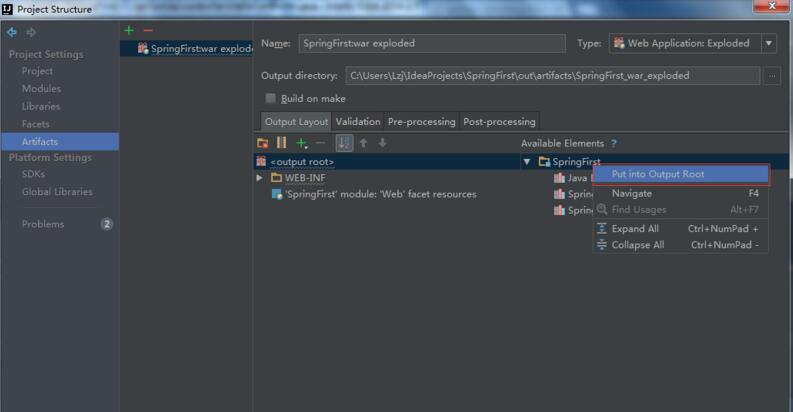

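In IDEA, click File > Project Structure > Artifacts, then in the Output Layout pane on the right, right-click the project name and choose Put into Output Root.

Afterwards, a lib directory appears under WEB-INF containing the jars the project references. Click OK.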
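A ClassNotFoundException here means the class is not on the web application's runtime classpath, which for a deployed web app is WEB-INF/classes plus the jars in WEB-INF/lib; that is why putting the libraries into the artifact fixes it. A small stdlib check can show which jar in a given WEB-INF/lib directory, if any, contains the missing class. `WebInfLibCheck` is a hypothetical name, and the directory to pass is whatever your artifact's output directory is.

```java
import java.io.File;
import java.io.IOException;
import java.util.ArrayList;
import java.util.List;
import java.util.zip.ZipFile;

// Lists the jars in a WEB-INF/lib directory that contain a given class.
class WebInfLibCheck {

    // "a.b.C" -> jar entry name "a/b/C.class"
    static String entryName(String className) {
        return className.replace('.', '/') + ".class";
    }

    // Jars in libDir containing className; empty if none, or if libDir doesn't exist.
    static List<String> jarsContaining(File libDir, String className) {
        List<String> hits = new ArrayList<>();
        File[] jars = libDir.listFiles((dir, name) -> name.endsWith(".jar"));
        if (jars == null) {
            return hits; // not a directory: nothing was deployed there
        }
        for (File jar : jars) {
            try (ZipFile zip = new ZipFile(jar)) {
                if (zip.getEntry(entryName(className)) != null) {
                    hits.add(jar.getName());
                }
            } catch (IOException e) {
                System.err.println("Skipping unreadable jar " + jar + ": " + e.getMessage());
            }
        }
        return hits;
    }

    public static void main(String[] args) {
        if (args.length == 0) {
            System.err.println("usage: java WebInfLibCheck <path-to-WEB-INF/lib>");
            return;
        }
        String cls = "org.springframework.web.context.ContextLoaderListener";
        System.out.println(cls + " found in: " + jarsContaining(new File(args[0]), cls));
    }
}
```

An empty list for the deployed WEB-INF/lib points to the missing-library problem above rather than a code error.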